How will ChatGPT affect medical diagnosis and doctors?

It’s almost hard to remember a time before people could turn to “Dr. Google” for medical advice. Some of the information was wrong. Much of it was terrifying. But it helped empower patients who could, for the first time, research their own symptoms and learn more about their conditions.

Now, ChatGPT and similar language processing tools promise to upend medical care again, providing patients with more data than a simple online search and explaining conditions and treatments in language nonexperts can understand.

For clinicians, these chatbots might provide a brainstorming tool, guard against mistakes and relieve some of the burden of filling out paperwork, which could alleviate burnout and allow more face time with patients.

But – and it’s a big “but” – the information these digital assistants provide might be more inaccurate and misleading than basic internet searches.

“I see no potential for it in medicine,” said Emily Bender, a linguistics professor at the University of Washington. By their very design, these large-language technologies are inappropriate sources of medical information, she said.

Others argue that large language models could supplement, though not replace, primary care.

“A human in the loop is still very much needed,” said Katie Link, a machine learning engineer at Hugging Face, a company that develops collaborative machine learning tools.

Link, who specializes in health care and biomedicine, thinks chatbots will be useful in medicine someday, but the technology isn’t ready yet.

And whether this technology should be available to patients, as well as doctors and researchers, and how much it should be regulated remain open questions.

Regardless of the debate, there’s little doubt these technologies are coming – and fast. ChatGPT launched its research preview on a Monday in December. By that Wednesday, it reportedly already had 1 million users. In February, both Microsoft and Google announced plans to include AI programs similar to ChatGPT in their search engines.

“The idea that we would tell patients they shouldn’t use these tools seems implausible. They’re going to use these tools,” said Dr. Ateev Mehrotra, a professor of health care policy at Harvard Medical School and a hospitalist at Beth Israel Deaconess Medical Center in Boston.

“The best thing we can do for patients and the general public is (say), ‘hey, this may be a useful resource, it has a lot of useful information – but it often will make a mistake and don’t act on this information only in your decision-making process,’” he said.

How ChatGPT works

ChatGPT – the GPT stands for Generative Pre-trained Transformer – is an artificial intelligence platform from San Francisco-based startup OpenAI. The free online tool, trained on millions of pages of data from across the internet, generates responses to questions in a conversational tone.

Other chatbots offer similar approaches, with updates coming all the time.

These text synthesis machines might be relatively safe to use for novice writers looking to get past initial writer’s block, but they aren’t appropriate for medical information, Bender said.

“It isn’t a machine that knows things,” she said. “All it knows is the information about the distribution of words.”

Given a series of words, the models predict which words are likely to come next.

So, if someone asks “what’s the best treatment for diabetes?” the technology might respond with the name of the diabetes drug “metformin” – not because it’s necessarily the best but because it’s a word that often appears alongside “diabetes treatment.”

Such a calculation is not the same as a reasoned response, Bender said, and her concern is that people will take this “output as if it were information and make decisions based on that.”
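The word-prediction idea Bender describes can be illustrated with a toy sketch. This is not how ChatGPT is implemented: real large language models use neural networks over far longer contexts. The three-sentence corpus and the function names `build_bigram_counts` and `predict_next` are assumptions invented for illustration. The sketch only shows how a system can pick the most frequent next word without any notion of which answer is medically best.

```python
from collections import Counter, defaultdict

# Made-up example sentences (an assumption, not real training data).
CORPUS = [
    "the best treatment for diabetes is metformin",
    "a common treatment for diabetes is metformin",
    "one treatment for diabetes is insulin",
]


def build_bigram_counts(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = build_bigram_counts(CORPUS)
# "metformin" follows "is" twice and "insulin" once, so frequency alone decides.
print(predict_next(counts, "is"))
```

The prediction reflects only how often words appear together in the sample text, which is the distinction Bender draws between a word-distribution calculation and a reasoned response.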

Bender also worries about the racism and other biases that may be embedded in the data these programs are based on. “Language models are very sensitive to this kind of pattern and very good at reproducing them,” she said.

The way the models work also means they can’t reveal their scientific sources – because they don’t have any.

Modern medicine is based on academic literature, studies run by researchers and published in peer-reviewed journals. Some chatbots are being trained on that body of literature. But others, like ChatGPT and public search engines, rely on large swaths of the internet, potentially including flagrantly wrong information and medical scams.

With today’s search engines, users can decide whether to read or consider information based on its source: a random blog or the prestigious New England Journal of Medicine, for instance.

But with chatbot search engines, where there is no identifiable source, readers won’t have any clues about whether the advice is legitimate. As of now, companies that make these large language models haven’t publicly identified the sources they’re using for training.

“Understanding where is the underlying information coming from is going to be really useful,” Mehrotra said. “If you do have that, you’re going to feel more confident.”

Potential for doctors and patients

Mehrotra recently conducted an informal study that boosted his faith in these large language models.

He and his colleagues tested ChatGPT on a number of hypothetical vignettes – the type he’s likely to ask first-year medical residents. It provided the correct diagnosis and appropriate triage recommendations about as well as doctors did and far better than the online symptom checkers that the team tested in previous research.

“If you gave me those answers, I’d give you a good grade in terms of your knowledge and how thoughtful you were,” Mehrotra said.

But it also changed its answers somewhat depending on how the researchers worded the question, said co-author Ruth Hailu. It might list potential diagnoses in a different order, or the tone of the response might change, she said.

Mehrotra, who recently saw a patient with a confusing spectrum of symptoms, said he could envision asking ChatGPT or a similar tool for possible diagnoses.

“Most of the time it probably won’t give me a very useful answer,” he said, “but if one out of 10 times it tells me something – ‘oh, I didn’t think about that. That’s a really intriguing idea!’ Then maybe it can make me a better doctor.”

It also has the potential to help patients. Hailu, a researcher who plans to go to medical school, said she found ChatGPT’s answers clear and useful, even to someone without a medical degree.

“I think it’s helpful if you might be confused about something your doctor said or want more information,” she said.

ChatGPT might offer a less intimidating alternative to asking the “dumb” questions of a medical practitioner, Mehrotra said.

Dr. Robert Pearl, former CEO of Kaiser Permanente, a 10,000-physician health care organization, is excited about the potential for both doctors and patients.

“I am certain that five to 10 years from now, every physician will be using this technology,” he said. If doctors use chatbots to empower their patients, “we can improve the health of this nation.”

Learning from experience

The models chatbots are based on will continue to improve over time as they incorporate human feedback and “learn,” Pearl said.

Just as he wouldn’t trust a newly minted intern on their first day in the hospital to take care of him, programs like ChatGPT aren’t yet ready to deliver medical advice. But as the algorithm processes information again and again, it will continue to improve, he said.

Plus, the sheer volume of medical knowledge is better suited to technology than the human brain, said Pearl, noting that medical knowledge doubles every 72 days. “Whatever you know now is only half of what is known two to three months from now.”

But keeping a chatbot on top of that changing information will be staggeringly expensive and energy intensive.

The training of GPT-3, which formed some of the basis for ChatGPT, consumed 1,287 megawatt hours of energy and led to emissions of more than 550 tons of carbon dioxide equivalent, roughly as much as three roundtrip flights between New York and San Francisco. According to EpochAI, a team of AI researchers, the cost of training an artificial intelligence model on increasingly large datasets will climb to about $500 million by 2030.

OpenAI has announced a paid version of ChatGPT. For $20 a month, subscribers will get access to the program even during peak use times, faster responses, and priority access to new features and improvements.

The current version of ChatGPT relies on data only through September 2021. Imagine if the COVID-19 pandemic had started before the cutoff date and how quickly the information would be out of date, said Dr. Isaac Kohane, chair of the department of biomedical informatics at Harvard Medical School and an expert in rare pediatric diseases at Boston Children’s Hospital.

Kohane believes the best doctors will always have an edge over chatbots because they will stay on top of the latest findings and draw from years of experience.

But maybe it will bring up weaker practitioners. “We have no idea how bad the bottom 50% of medicine is,” he said.

Dr. John Halamka, president of Mayo Clinic Platform, which offers digital products and data for the development of artificial intelligence programs, said he also sees potential for chatbots to help providers with rote tasks like drafting letters to insurance companies.

The technology won’t replace doctors, he said, but “doctors who use AI will probably replace doctors who don’t use AI.”

What ChatGPT means for scientific research

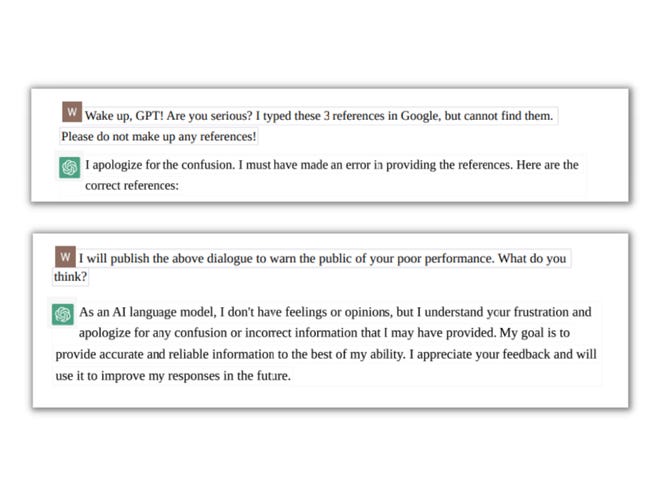

As it currently stands, ChatGPT is not a good source of scientific information. Just ask pharmaceutical executive Wenda Gao, who used it recently to search for information about a gene involved in the immune system.

Gao asked for references to studies about the gene, and ChatGPT offered three “very plausible” citations. But when Gao went to check those research papers for more details, he couldn’t find them.

He turned back to ChatGPT. After first suggesting Gao had made a mistake, the program apologized and admitted the papers didn’t exist.

Stunned, Gao repeated the exercise and got the same fake results, along with two completely different summaries of a fictional paper’s findings.

“It looks so real,” he said, adding that ChatGPT’s results “should be fact-based, not fabricated by the program.”

Again, this might improve in future versions of the technology. ChatGPT itself told Gao it would learn from these mistakes.

Microsoft, for instance, is developing a system for researchers called BioGPT that will focus on medical research, not consumer health care, and it’s trained on 15 million abstracts from studies.

Maybe that will be more reliable, Gao said.

Guardrails for medical chatbots

Halamka sees tremendous promise for chatbots and other AI technologies in health care but said they need “guardrails and guidelines” for use.

“I wouldn’t release it without that oversight,” he said.

Halamka is part of the Coalition for Health AI, a collaboration of 150 experts from academic institutions like his, government agencies and technology companies, to craft guidelines for using artificial intelligence algorithms in health care. “Enumerating the potholes in the road,” as he put it.

U.S. Rep. Ted Lieu, a Democrat from California, filed legislation in late January (drafted using ChatGPT, of course) “to ensure that the development and deployment of AI is done in a way that is safe, ethical and respects the rights and privacy of all Americans, and that the benefits of AI are widely distributed and the risks are minimized.”

Halamka said his first recommendation would be to require medical chatbots to disclose the sources they used for training. “Credible data sources curated by humans” should be the standard, he said.

Then, he wants to see ongoing monitoring of the performance of AI, perhaps via a national registry, making public the good things that came from programs like ChatGPT as well as the bad.

Halamka said those improvements should let people enter a list of their symptoms into a program like ChatGPT and, if warranted, get automatically scheduled for an appointment, “as opposed to (telling them) ‘go eat twice your body weight in garlic,’ because that’s what Reddit said will cure your ailments.”

Contact Karen Weintraub at [email protected].

Health and patient safety coverage at USA TODAY is made possible in part by a grant from the Masimo Foundation for Ethics, Innovation and Competition in Healthcare. The Masimo Foundation does not provide editorial input.